Price may vary depending on scope of assessment.

Estimates depend on the duration and scope of support, the number of assessment prompts and systems to be assessed, etc. Contact us with a request for more information using the form below.

I am looking to conduct a comprehensive assessment of the security risks associated with our AI-powered system, particularly during its release or update phases.

I am seeking assistance to evaluate the security risks of our AI systems, as my current understanding of their internal mechanisms is limited, leading to concerns regarding potential vulnerabilities.

I am looking to assess the suitability of the roles and authorizations assigned to the AI integrated within our service.

AI Red Team: Specialized Security Assessment Service for AI-Enabled Systems

In recent years, the use of AI has been increasing in various fields. As more platforms implement AI in innovative ways, new vulnerabilities specific to AI developments are emerging. AI Red Team service is designed to account for the nature of AI and its inherent risks and is tailor-made to assess security risks that cannot be covered by conventional assessments.

* Presently we offer assessment services for AI used in LLMs only.

The Standalone Prompt Assessment identifies vulnerabilities of prompts used with AI.

| Assessment Items (Example) | Explanation |

| Prompt Injection | A technique that intentionally sends special questions or commands to an AI system to cause unintended results for the developer. |

| Prompt Leaking | A technique that bypasses an AI's original prompt and causes it to reveal its original prompt either in whole or in part. |

A system using LLM does not operate independently with LLM alone. It is linked with various peripheral systems. Therefore, any system using LLM has not only risks associated with an LLM itself, but also various peripheral risks associated with each of the pieces it is composed of.

The Comprehensive LLM System Assessment assesses the entire system, not just the LLM itself. AI Red Team's approach takes the perspective of problem emergence to assess the following:

1

Our automated assessment testing is an exclusive, innovative development that efficiently and comprehensively identifies vulnerabilities. Our LLM security expert engineers conduct assessments manually to detect specific issues that cannot be covered by automated testing and to delve deeper into identified vulnerabilities.

2

We leverage our extensive expertise in traditional security assessments to uncover vulnerabilities caused by AI across the entire platform, addressing the OWASP LLM Top 10 Vulnerabilities. This comprehensive approach is possible because we evaluate not just the AI, but the entirety of the system implementing it, including every component. This allows us to fully understand all perspectives from which a vulnerability may be discovered.

*Comprehensive assessments are comprised of our AI Red Team service in conjunction with our web application and platform assessment services, to cover the entirety of a system or platform.

3

In response to this dilemma, it's crucial to implement protective measures against new types of attacks, even post-release. To tackle these challenges, we are developing a complementary AI Blue Team service focused on the continuous monitoring of AI applications, scheduled for release in April 2024. When integrated with the AI Red Team service, the AI Blue Team will utilize insights from the Red Team's attack simulations to enhance its monitoring capabilities, based on observed successful attack strategies. This synergy will bolster the security of AI services, leveraging the strengths of both services for comprehensive protection.

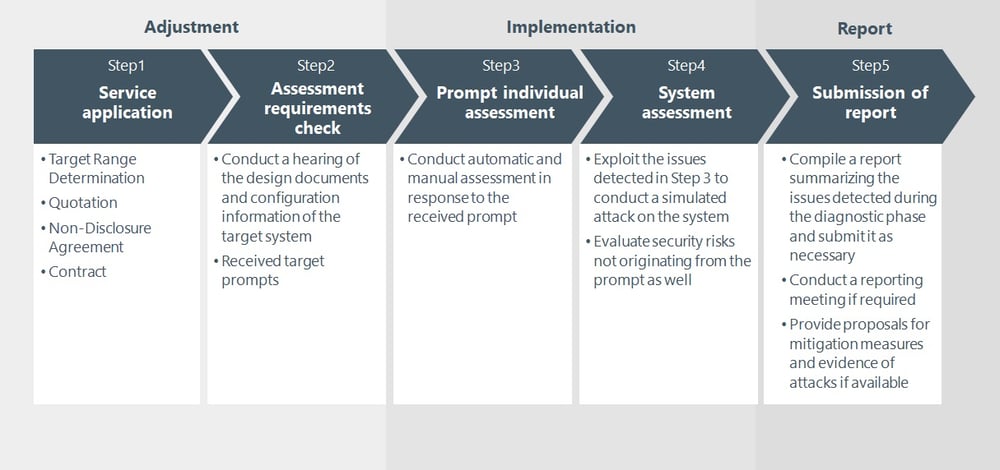

Information about LLMs, APIs, and assessment prompts used in the system will be collected on our initial pre-assessment consultation. Prior to starting assessment activities, we will confirm the assessment scenario and any system configuration necessary in advance.

Price may vary depending on scope of assessment.

Estimates depend on the duration and scope of support, the number of assessment prompts and systems to be assessed, etc. Contact us with a request for more information using the form below.